It is true that for training, GPUs can exploit much of the available parallelism, which results in much faster training. If your workload is math-heavy, you should use a GPU.

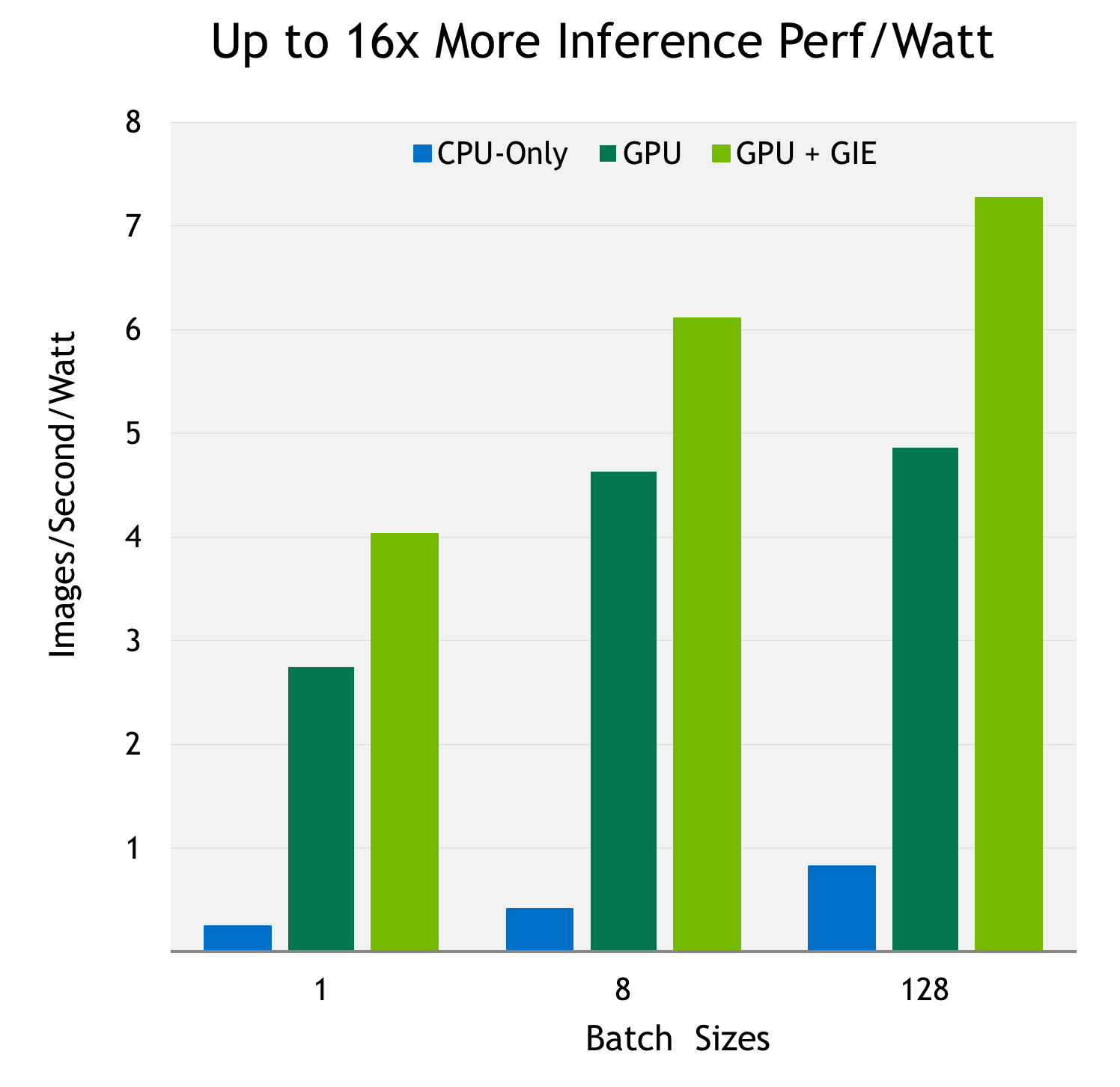

Production Deep Learning with NVIDIA GPU Inference Engine (NVIDIA Technical Blog)

GPUs are definitely the preferred choice for larger data sets and for workloads that require high performance and a large number of computations.
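To make the parallelism point concrete: in a matrix product C = AB, every row of C depends only on one row of A and on B, so all rows can be computed independently and at the same time. The sketch below is a minimal illustration using only the Python standard library; the function names are hypothetical, and the thread pool merely stands in for the many cores of a GPU. Because of CPython's global interpreter lock it is not actually faster than the sequential loop; it only shows that the work splits into independent pieces.

```python
from concurrent.futures import ThreadPoolExecutor

Matrix = list[list[float]]


def row_times_matrix(row: list[float], b: Matrix) -> list[float]:
    """Compute one row of A @ B; it needs only one row of A plus all of B."""
    cols = len(b[0])
    return [sum(row[k] * b[k][j] for k in range(len(b))) for j in range(cols)]


def matmul_sequential(a: Matrix, b: Matrix) -> Matrix:
    """Plain loop: compute the output rows one after another."""
    return [row_times_matrix(row, b) for row in a]


def matmul_parallel(a: Matrix, b: Matrix, workers: int = 4) -> Matrix:
    """Hand each independent output row to a worker (a stand-in for GPU cores).

    Threads only illustrate the independence of the rows here; a GPU runs
    thousands of such independent computations simultaneously.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map preserves input order, so row i of the result is row i of C.
        return list(pool.map(lambda row: row_times_matrix(row, b), a))


if __name__ == "__main__":
    a = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
    b = [[7.0, 8.0], [9.0, 10.0]]
    print(matmul_parallel(a, b))  # prints [[25.0, 28.0], [57.0, 64.0], [89.0, 100.0]]
```

The same independence holds inside each row (every element is its own dot product), which is why GPU libraries can split the work even more finely than this row-level sketch does.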

As most of the computation involved in deep learning consists of matrix operations, a GPU outperforms a conventional CPU by running these operations in parallel, so the model trains much more quickly. The larger the computations, the greater the advantage of a GPU over a CPU. Training a deep learning model requires a large dataset, and therefore a large number of computational operations and a lot of memory. Each GPU core is smaller than a CPU core, but a GPU has many more logical cores, and its high memory bandwidth and large, easy-to-use registers make the graphics card much faster than a CPU for this kind of work.

So we can conclude that GPUs are inherently the best-optimized hardware for model training, because they have the following features:

- They can do multiple calculations in parallel.
- They have a large number of cores.

This makes a GPU the optimum choice for processing data efficiently, and it is why GPUs are the heart of a deep learning build. For inference, there is usually far less parallelism to exploit than in training; however, CNNs still benefit from it, resulting in faster inference.

Is a GPU or a CPU more important for machine learning? For training deep learning models efficiently, it is the GPU. The selection of a GPU depends on the performance your deep learning project requires, and because the project will run for a long time, the GPU should be chosen carefully. Two of the most popular choices are NVIDIA and AMD. Among the GPU technology options for deep learning, consumer-grade GPUs are not appropriate for large-scale deep learning projects but can offer an entry point, while data center GPUs are the standard for production deep learning implementations. The NVIDIA Tesla V100 Tensor Core GPU, for example, is an advanced data center GPU designed for machine learning, deep learning, and high-performance computing (HPC).

If you are using a popular programming language for machine learning, such as Python or MATLAB, telling your computer to run the operations on your GPU is typically a one-line change. First make sure your GPU is supported; if it is and you are using Keras, you just have to run the code, and Keras will automatically run the computation on the GPU. You should also make sure to use all the cores of your machine.

One user who checked GPU usage with nvidia-smi reported that an example script did run on the GPU but seemed incredibly slow. When compared, its examples per second were lower than the figures on the TensorFlow website, though not by much, and the example script used the CPU only to store checkpoints and summary files.

PCIe lanes matter less than you might expect. In one example, the forward and backward pass takes 216 milliseconds (ms), while the CPU-GPU transfer takes:

| PCIe lanes | CPU-GPU transfer | Theoretical |
|---|---|---|
| 16 | about 2 ms | 1.1 ms |
| 8 | about 5 ms | 2.3 ms |
| 4 | about 9 ms | 4.5 ms |

Thus going from 4 to 16 PCIe lanes gives a performance increase of only roughly 3.2%, since the faster transfer saves about 7 ms (9 ms - 2 ms) out of a 216 ms pass. However, if you use PyTorch's data loader with pinned memory, you gain exactly 0% performance. So do not waste your money on extra PCIe lanes for a setup like this.
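The "one-liner" for moving work to the GPU and the pinned-memory data loader can be sketched in PyTorch as follows. This is a minimal example assuming PyTorch is installed; the toy dataset, model, and hyperparameters are made up for illustration, and the script falls back to the CPU when no CUDA GPU is visible. Pinned (page-locked) host memory lets the copy to the GPU overlap with computation, which is plausibly why extra PCIe lanes buy so little once it is enabled. The sketch does not measure transfer times.

```python
import torch
from torch import nn
from torch.utils.data import DataLoader, TensorDataset

# The "one-liner": use the GPU if PyTorch can see one, otherwise the CPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Toy data, made up purely for illustration: 256 samples, 20 features, 2 classes.
features = torch.randn(256, 20)
labels = torch.randint(0, 2, (256,))
dataset = TensorDataset(features, labels)

# pin_memory=True puts each batch in page-locked host memory so the host-to-GPU
# copy can run asynchronously; it only makes sense when a CUDA device exists.
loader = DataLoader(dataset, batch_size=32, shuffle=True,
                    pin_memory=(device.type == "cuda"))

model = nn.Linear(20, 2).to(device)  # move the model's parameters to the device
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)
loss_fn = nn.CrossEntropyLoss()


def train_one_epoch() -> float:
    """Run one pass over the data and return the average loss per sample."""
    total = 0.0
    for x, y in loader:
        # non_blocking=True lets the copy overlap with compute when the
        # source tensor is in pinned memory; on the CPU it is a no-op.
        x = x.to(device, non_blocking=True)
        y = y.to(device, non_blocking=True)
        optimizer.zero_grad()
        loss = loss_fn(model(x), y)
        loss.backward()
        optimizer.step()
        total += loss.item() * x.size(0)
    return total / len(dataset)


if __name__ == "__main__":
    print(device, train_one_epoch())
```

Apart from the device line and the two `.to(device)` calls, the training loop is unchanged between CPU and GPU, which is what makes switching hardware a small code change in frameworks like PyTorch.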

The NVIDIA Tesla V100 is powered by the NVIDIA Volta architecture, comes in 16 GB and 32 GB configurations with 149 teraflops of performance and a 4096-bit memory bus, and offers the performance of up to 100 CPUs in a single GPU. NVIDIA is a popular option because of the first-party libraries it provides, known as the CUDA toolkit. The GPU largely decides the performance gain you get when training neural networks.

When using GPUs for on-premises deep learning implementations, multiple vendor options are available. A deep learning model can take a long time to train: to achieve high accuracy, deep learning requires large amounts of labeled data and therefore substantial computing power, and this is where you decide which processing unit to use.

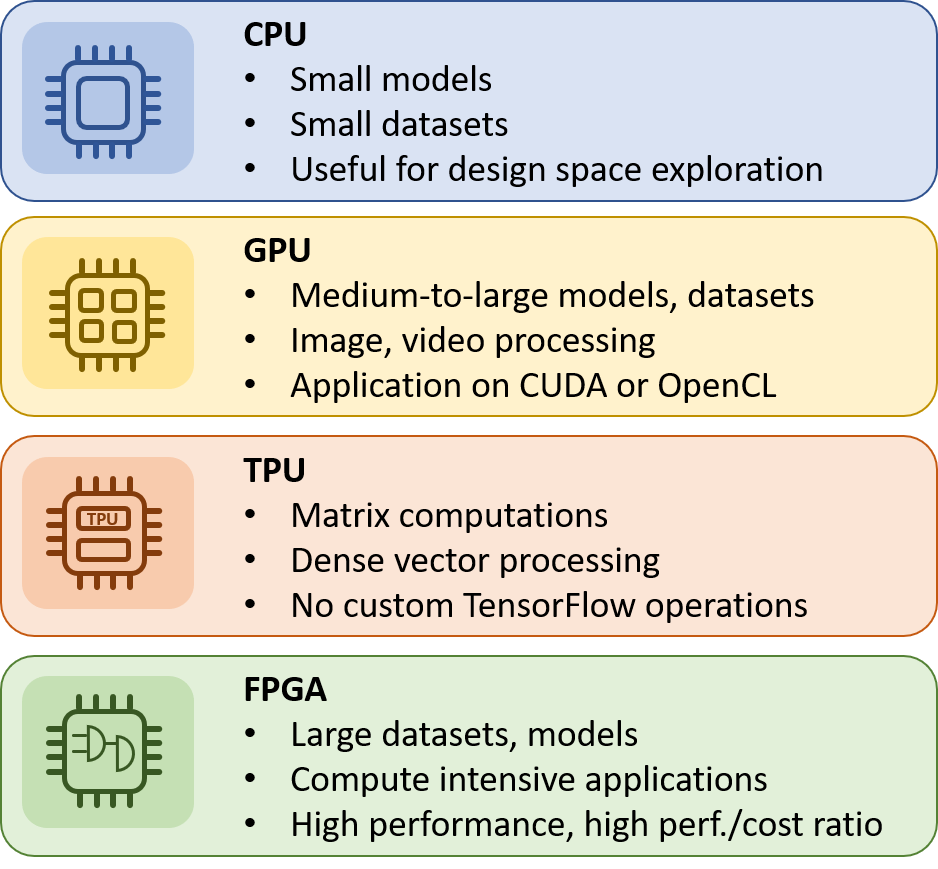

CPU, GPU, FPGA, or TPU: Which One to Choose for My Machine Learning Training? (InAccel)

The Best GPUs for Deep Learning in 2020: An In-Depth Analysis

